DELA

Document-Level MachinE TransLation EvAluation

Why Document-Level Evaluation?

One of the biggest challenges for Machine Translation (MT) is the ability to handle discourse dependencies and the wider context of a document. The purpose of DELA – Document-level MachinE TransLation EvAluation – is to revolutionize the current practices in the machine translation evaluation field and demonstrate that the time is ripe for switching to document-level assessments.

Increasing efforts have been made to add discourse to neural machine translation (NMT) systems. However, the results reported for those attempts are somewhat limited, as evaluation is still mostly performed at the sentence level using single references, which cannot capture the improvements of those systems.

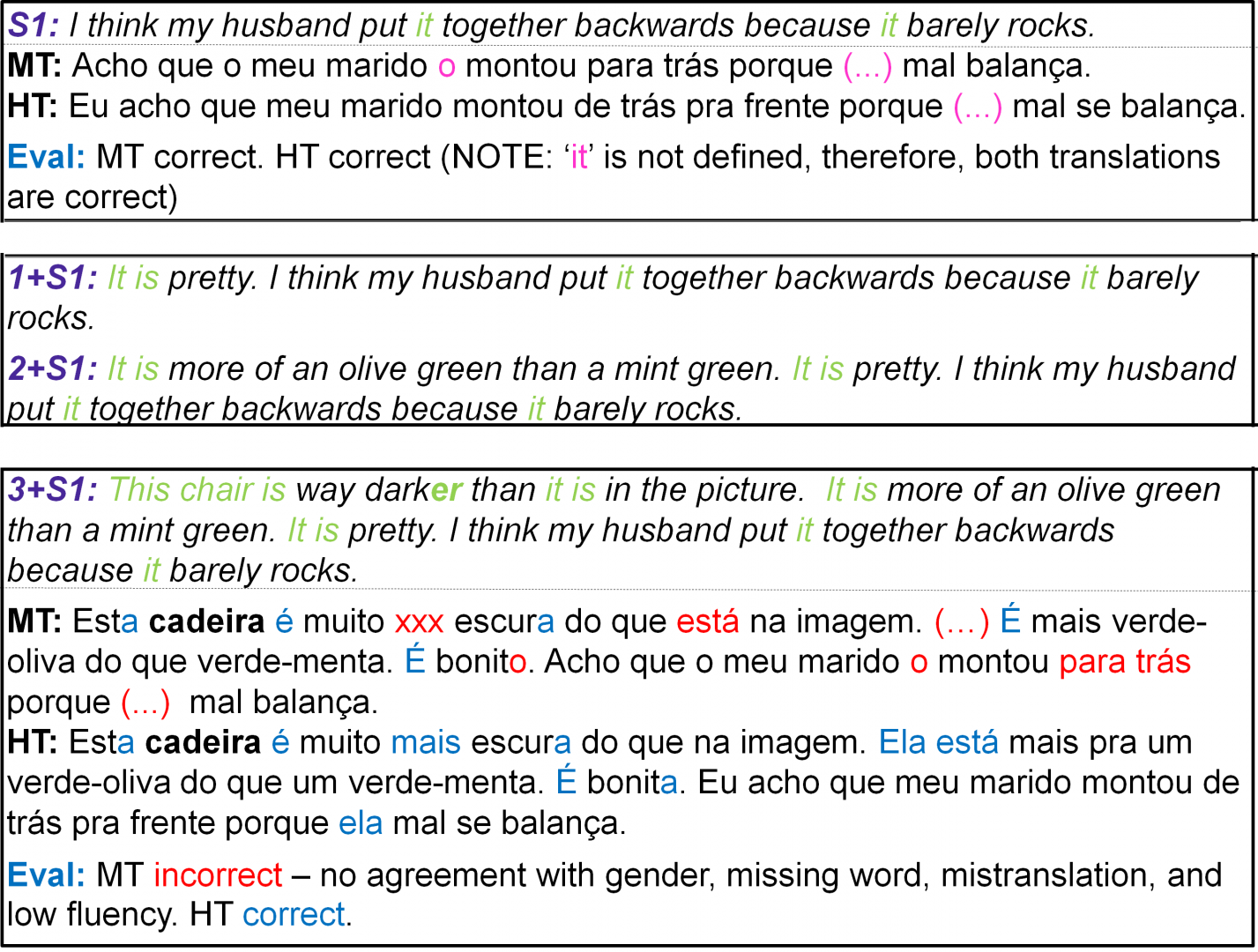

By assessing translation at the document level, it is possible to capture errors related to suprasentential context, textual cohesion and coherence (such as mistranslation of ambiguous words and errors in gender and number agreement), which are at times impossible to recognise at the sentence level.

Misevaluations Example

Objectives

The main objective of the DELA project is to test existing human and automatic sentence-level metrics at the document level and to define best practices for document-level machine translation evaluation. DELA will also gather translators’ requirements to design a translation evaluation tool that provides an environment for translators to assess MT quality at the document level with human evaluation metrics. In addition, the tool will offer scores from the automatic evaluation metrics identified in the project as best suited for document-level evaluation.

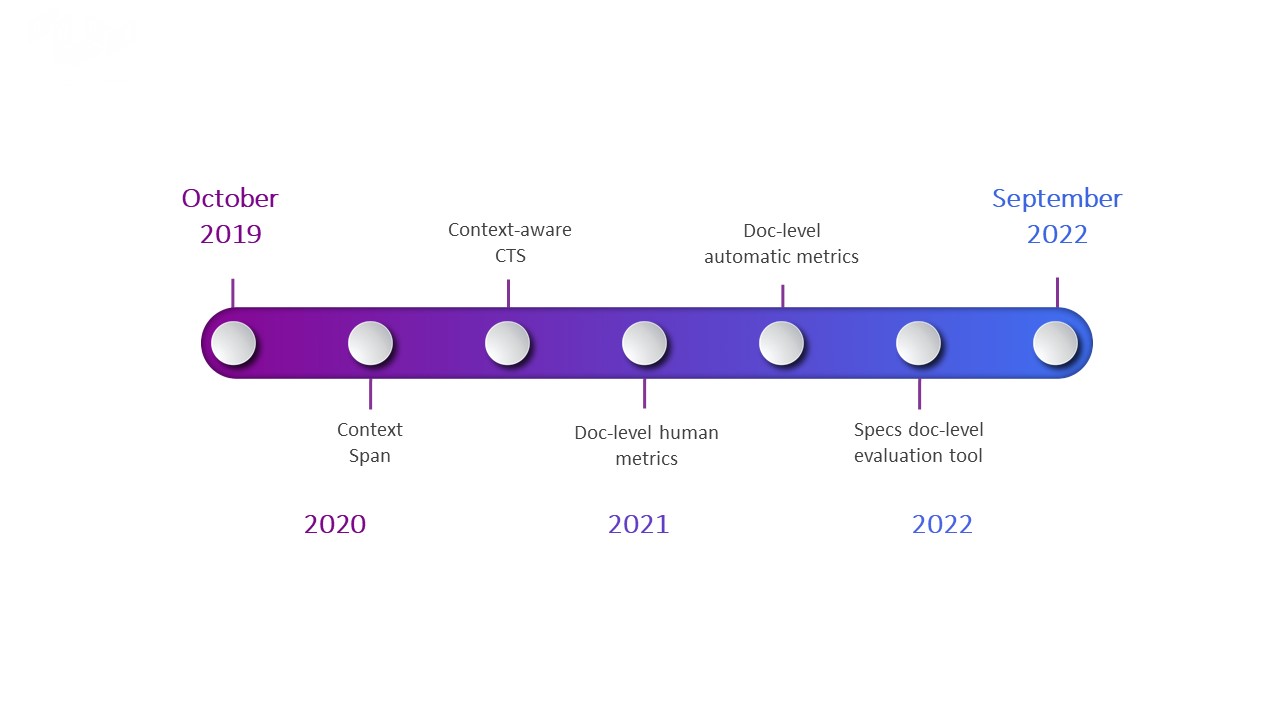

The key work packages that will be conducted in DELA are:

- WP1 – Testing context span for document-level evaluation

- WP2 – Construction of context-aware challenge test sets

- WP3 – New-generation document-level human evaluation metrics

- WP4 – New-generation document-level automatic evaluation metrics

- WP5 – Specifications for document-level evaluation tool

Timeline

Publications:

Castilho, S., Cavalheiro Camargo, J. L., Menezes, M., and Way, A. (2021). DELA Corpus - A Document-Level Corpus Annotated with Context-Related Issues. In Proceedings of the Sixth Conference on Machine Translation, pages 571–582, November. Association for Computational Linguistics (ACL).

Publications from pilot experiments derived from DELA:

Castilho, S., Popovic, M., and Way, A. (2020). On context span needed for machine translation evaluation. In Proceedings of the 12th Language Resources and Evaluation Conference, pages 3735–3742.

Awards:

Sheila Castilho won Researcher of the Year in the ADAPT Recognition Awards 2021 for her impactful work on the IRC fellowship and in Machine Translation. Her work is consistently recognised by the MT community, as seen in her increasing citations and in how often her voice is sought at major conferences.

Prof Andy Way: andy.way@adaptcentre.ie

DELA stands for Document-Level MachinE TransLation EvAluation

DELA is a project funded by the Irish Research Council (IRC) under the Postdoctoral Fellowship scheme, awarded to Dr. Sheila Castilho. It will run from 1 October 2020 to 30 September 2022. The project aims to find new ways to evaluate machine translation with context.